Introduction

This page details the intended procedure for rolling out 64-bit Debian 7.0 (aka “wheezy”) on my network, along with issues encountered along the way and indications of what has already been completed and what still remains to be done.

The project was completed 21 June 2013. Some activities remain to be done (see ‘Future Plans’ below) but these will be documented elsewhere or raised as self-addressed tickets.

Overview

The combined test and rollout plan is given below; its steps are described in detail either below or on separate pages.


- (done) test basic wheezy installation (see below)

- (done) test XtreemFS on wheezy (see below)

- (done) test cLVM over DRBD and OpenAIS on wheezy (see below)

- (done) test OCFS2 over DRBD on wheezy (see below)

- (done) test Fritz!Box with USB volumes (see below)

- (done) add wheezy support to PDI (see below)

- (done) test pacemaker with Meatware (see below)

- (done) test clustered DNS, clustered DHCP, clustered NIS (see below)

- (done) decide hardware configuration for VM/login servers (see below)

- (done) await release of wheezy

- (done) migrate all VMs from fiori to torchio

- (done) shut down all storage pools on fiori

- (done) split the drbd mirrors between torchio and fiori

- (done) remove old 320GB disk from fiori (and put aside)

- (done) put a 4TB disk in fiori

- (done) rename the old fiori wiki page and start a new one

- (done) install fiori with wheezy, configuring it as a login server (see fiori)

- (done) configure fiori as a storage server (see fiori and Configuring storage services)

- (done) configure fiori as a NIS master, DNS server, DHCP server (see fiori, Configuring NIS services, Configuring DNS services and Configuring DHCP services)

- (done) configure fiori storage space (see fiori and Configuring storage services)

- (done) migrate data onto it (see fiori)

- (cancelled) physically swap fiori and torchio (installation of fiori was quick enough to make this step redundant)

- (done) repeat for torchio (and document at torchio, etc.)

- (done) on macaroni turn off those services that are now running elsewhere; i.e. DNS, DHCP, NIS, NTP, DYNDNS

- (done) reinstall fiori so that it is totally clean

- (done) reinstall torchio so that it is totally clean

- (done) tidy documentation (see links leading from fiori and torchio)

Test basic wheezy installation

- (done) on gnocchi, use paa to register the host and set up indirects and shares.

- (failed) Install a VM using virt-manager with wheezy; this failed because i18n stuff is now required to be in the repository and I don’t have that

- (done) Fix my repository:

- (done) Grep paa sources for ‘--getcontents’ and add ‘--i18n’ close by

- (done) Update my paa config to add ‘--i18n’

- (done) Quick fix to my current wheezy mirror and freeze

- (done) Use virt-manager to create storage pools for accessing ISOs and for accessing the scratch directory where I will create VM images

- (failed) Use virt-manager to install a wheezy VM; deviations from default answers to questions were as follows:

- source of packages: http://install.pasta.net/debian-wheezy.<hostname>

- deselect all package groups

- (done) Urgent post-installation steps:

- (done) Run:

apt-get install openssh-server subversion less vim nfs-common rsync apt-file reportbug w3m

- (failed) Run:

apt-file update

Output was:

    root@orzo:# apt-file update
    Ignoring source without Contents File: http://install.pasta.net/debian-wheezy.orzo/dists/wheezy/main/Contents-amd64.gz
    Ignoring source without Contents File: http://security.debian.org/dists/wheezy/updates/main/Contents-amd64.gz
    root@orzo:#

- (done) Investigate the causes of the above failure: it is caused by BTS#637442.

- (failed) Work around the above problem: the debmirror command has an --include=<regex> option, which should have helped here, but it did not. Thankfully this only prevents apt-file from working properly.

- (done) As per replies to my bug update, install the squeeze backport of the latest debmirror by running:

    echo "deb http://ftp2.de.debian.org/debian-backports squeeze-backports main" > /etc/apt/sources.list.d/backports.list
    apt-get update
    apt-get -t squeeze-backports install debmirror

- (done) Remirror and refreeze wheezy, using the new debmirror.

- (failed) Re-run:

apt-file update

Output was the same.

- (pending) Discussion continues on BTS#637442.

- (done) Run:

- (done) Manually configure NIS, DNS and automounter.

Test XtreemFS on wheezy

- (failed) Install xtreemfs deb on wheezy; prerequisites not satisfiable, neither from the xtreemfs squeeze repo nor from any recent Ubuntu repo

- (done) Download latest xtreemfs sources (1.4)

- (failed) Install prerequisites; the upstream repos have a permissions issue which prevents download.

- (done) Fix permissions by:

- (done) adding --chmod=u=rw,go=r,D+x to debmirror’s rsync options

- (done) remirror and refreeze (shortcut)

- (done) on orzo try:

    apt-get update
    apt-get install openjdk-7-jdk

- (done) if that worked then apply the same options to other repos and to the example config files

- (failed) Compile xtreemfs; the list of dependencies is horrendous.

- (failed) Test XtreemFS; the documentation states:

Please keep in mind that the majority of two replicas is two, i.e. using WqRq with two replicas will provide you no availability in case of a replica failure. Use the WqRq policy only if you have at least 3 OSDs - otherwise select the WqR1 policy.

This suggests to me that if one node of a two-node cluster dies, then the other node can continue to read, but cannot write anything! This is no good for home directories.
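The arithmetic behind the quoted warning can be sketched as follows. This assumes a simple majority quorum (more than half of the replicas), which is what the quoted passage implies; the function names are illustrative and are not part of XtreemFS.

```shell
# Smallest majority of n replicas: floor(n/2) + 1.
majority() { echo $(( $1 / 2 + 1 )); }

# Number of replicas that may fail while a majority can still be formed.
tolerated_failures() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 2 3 5; do
    echo "replicas=$n majority=$(majority "$n") tolerated_failures=$(tolerated_failures "$n")"
done
```

With two replicas the majority is two, so no failure is tolerated; three replicas are the smallest configuration that survives the loss of one.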

Test cLVM over DRBD and OpenAIS on wheezy

- (done) Do two basic wheezy installations (orzo and borzo)

- (done) Add a second volume to both

- (done) Configure DRBD access to the volume by running:

    # on both nodes
    dd if=/dev/zero bs=64M of=/dev/vdb &
    # on both nodes
    cat > /etc/drbd.d/drbd0.res <<EOF
    resource drbd0 {
        protocol C;
        device /dev/drbd0 minor 0;
        meta-disk internal;
        on orzo {
            address 192.168.1.19:7790;
            disk /dev/vdb;
        }
        on borzo {
            address 192.168.1.20:7790;
            disk /dev/vdb;
        }
    }
    EOF
    # on both nodes
    wait
    # on both nodes
    drbdadm -- --force create-md drbd0
    # on both nodes
    drbdadm up drbd0
    # on one node only
    drbdadm -- --clear-bitmap new-current-uuid drbd0
    # on both nodes
    drbdadm primary drbd0

- (done) Configure OpenAIS (see [MDI pdi]’s configure_cluster_node() function and Configuring cluster services)

- (suspended) Configure cLVM; currently blocked by BTS#697676; although this bug report says the bug is fixed, the packages have not made it to the mirrors yet.

- (suspended) Continue configuring cLVM; although BTS#563320 has been fixed and clvm is now compiled against Corosync/OpenAIS, Corosync/OpenAIS are in a state of flux and not much seems to have made it into wheezy

- (failed) Continue configuring cLVM; cLVM+Corosync/OpenAIS must wait until the next Debian release, as it seems too unstable.
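Before layering anything on top of the DRBD device configured above, it is worth confirming that both nodes are connected, Primary and up to date. The following is a minimal sketch that assumes the DRBD 8.x layout of /proc/drbd (a line such as ` 0: cs:Connected ro:Primary/Primary ds:UpToDate/UpToDate C r-----`); that layout and the function names are assumptions, not something recorded on this page.

```shell
# Print one field (cs, ro or ds) for a DRBD minor, read from a
# /proc/drbd-style file. The file argument is optional so that the
# function can also be pointed at a saved copy.
drbd_field() {
    minor=$1 field=$2 file=${3:-/proc/drbd}
    awk -v m="$minor:" -v f="$field:" '
        $1 == m {
            for (i = 2; i <= NF; i++)
                if (index($i, f) == 1) { print substr($i, length(f) + 1); exit }
        }' "$file"
}

# Succeed only if minor 0 is connected, dual-primary and fully synced.
drbd_ready() {
    [ "$(drbd_field 0 cs "$1")" = Connected ] &&
    [ "$(drbd_field 0 ro "$1")" = Primary/Primary ] &&
    [ "$(drbd_field 0 ds "$1")" = UpToDate/UpToDate ]
}
```

Running `drbd_ready && echo "drbd0 ready"` on either node would then gate the next step on the replication state.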

Test OCFS2 over DRBD on wheezy

- (done) Do two basic wheezy installations (orzo and borzo)

- (done) Add a second volume to both

- (done) Configure DRBD access to the volume by running:

    # on both nodes
    apt-get install drbd8-utils
    dd if=/dev/zero bs=64M of=/dev/vdb &
    cat > /etc/drbd.d/drbd0.res <<EOF
    resource drbd0 {
        protocol C;
        device /dev/drbd0 minor 0;
        meta-disk internal;
        on orzo {
            address 192.168.1.19:7790;
            disk /dev/vdb;
        }
        on borzo {
            address 192.168.1.20:7790;
            disk /dev/vdb;
        }
    }
    EOF
    wait
    drbdadm -- --force create-md drbd0
    drbdadm up drbd0
    # on one node only
    drbdadm -- --clear-bitmap new-current-uuid drbd0
    # on both nodes
    drbdadm primary drbd0

- (done) Configure OCFS2 by running:

    # on both nodes
    apt-get install ocfs2-tools
    cat > /etc/ocfs2/cluster.conf <<EOF
    node:
        ip_port = 7777
        ip_address = 192.168.1.19
        number = 0
        name = orzo
        cluster = ocfs2
    node:
        ip_port = 7777
        ip_address = 192.168.1.20
        number = 1
        name = borzo
        cluster = ocfs2
    cluster:
        node_count = 2
        name = ocfs2
    EOF
    dpkg-reconfigure ocfs2-tools
    invoke-rc.d o2cb start
    /etc/init.d/o2cb online
    # on one node only
    mkfs.ocfs2 --fs-feature-level=max-features /dev/drbd0
    # on both nodes
    mount -t ocfs2 -o _netdev,noatime,data=writeback,commit=60,nodiratime /dev/drbd0 /mnt

- (done) stress testing

- (failed) I got it to crash with the following in the dmesg output:

    root@orzo:/var/log# grep 'Mar 25 22:02:41 orzo kernel' syslog | sed 's/^.\{44\}//'
    swapper/2: page allocation failure: order:0, mode:0x20
    Pid: 0, comm: swapper/2 Not tainted 3.2.0-4-amd64 #1 Debian 3.2.39-2
    Call Trace:
    <IRQ> [<ffffffff810b8543>] ? warn_alloc_failed+0x11a/0x12d
    [<ffffffff810bb26f>] ? __alloc_pages_nodemask+0x704/0x7aa
    [<ffffffff812cf547>] ? tcp_v4_do_rcv+0x166/0x323
    [<ffffffff810e46d6>] ? alloc_pages_current+0xc7/0xe4
    [<ffffffffa00be6f2>] ? try_fill_recv+0x42/0x2d5 [virtio_net]
    [<ffffffffa00bf1bc>] ? virtnet_poll+0x431/0x4a9 [virtio_net]
    [<ffffffff81065ead>] ? timekeeping_get_ns+0xd/0x2a
    [<ffffffff8128ecca>] ? net_rx_action+0xa1/0x1af
    [<ffffffff81243469>] ? add_interrupt_randomness+0x38/0x155
    [<ffffffff8104c060>] ? __do_softirq+0xb9/0x177
    [<ffffffff81354bac>] ? call_softirq+0x1c/0x30
    [<ffffffff8100f8dd>] ? do_softirq+0x3c/0x7b
    [<ffffffff8104c2c8>] ? irq_exit+0x3c/0x9a
    [<ffffffff8100f60d>] ? do_IRQ+0x82/0x98
    [<ffffffff8134dc6e>] ? common_interrupt+0x6e/0x6e
    <EOI> [<ffffffff8109611d>] ? rcu_needs_cpu+0x50/0x1bb
    [<ffffffff8102b364>] ? native_safe_halt+0x2/0x3
    [<ffffffff81014508>] ? default_idle+0x47/0x7f
    [<ffffffff8100d24d>] ? cpu_idle+0xaf/0xf2
    [<ffffffff81070bc6>] ? arch_local_irq_restore+0x2/0x8
    [<ffffffff8133fc5c>] ? start_secondary+0x1d5/0x1db
    Mem-Info:
    Node 0 DMA per-cpu:
    CPU 0: hi: 0, btch: 1 usd: 0
    CPU 1: hi: 0, btch: 1 usd: 0
    CPU 2: hi: 0, btch: 1 usd: 0
    CPU 3: hi: 0, btch: 1 usd: 0
    Node 0 DMA32 per-cpu:
    CPU 0: hi: 90, btch: 15 usd: 83
    CPU 1: hi: 90, btch: 15 usd: 76
    CPU 2: hi: 90, btch: 15 usd: 85
    CPU 3: hi: 90, btch: 15 usd: 82
    active_anon:9800 inactive_anon:9818 isolated_anon:0
    active_file:13212 inactive_file:16151 isolated_file:0
    unevictable:0 dirty:4997 writeback:0 unstable:0
    free:422 slab_reclaimable:4852 slab_unreclaimable:4337
    mapped:244 shmem:20 pagetables:536 bounce:0
    Node 0 DMA free:996kB min:120kB low:148kB high:180kB active_anon:1312kB inactive_anon:1352kB active_file:4956kB inactive_file:5408kB unevictable:0kB isolated(anon):0kB isolated(file):0kB present:15688kB mlocked:0kB dirty:340kB writeback:0kB mapped:4kB shmem:0kB slab_reclaimable:824kB slab_unreclaimable:836kB kernel_stack:80kB pagetables:68kB unstable:0kB bounce:0kB writeback_tmp:0kB pages_scanned:0 all_unreclaimable? no
    lowmem_reserve[]: 0 236 236 236
    Node 0 DMA32 free:692kB min:1908kB low:2384kB high:2860kB active_anon:37888kB inactive_anon:37920kB active_file:47892kB inactive_file:59196kB unevictable:0kB isolated(anon):0kB isolated(file):0kB present:242380kB mlocked:0kB dirty:19648kB writeback:0kB mapped:972kB shmem:80kB slab_reclaimable:18584kB slab_unreclaimable:16512kB kernel_stack:656kB pagetables:2076kB unstable:0kB bounce:0kB writeback_tmp:0kB pages_scanned:0 all_unreclaimable? no
    lowmem_reserve[]: 0 0 0 0
    Node 0 DMA: 0*4kB 1*8kB 0*16kB 1*32kB 1*64kB 1*128kB 1*256kB 1*512kB 0*1024kB 0*2048kB 0*4096kB = 1000kB
    Node 0 DMA32: 173*4kB 0*8kB 0*16kB 0*32kB 0*64kB 0*128kB 0*256kB 0*512kB 0*1024kB 0*2048kB 0*4096kB = 692kB
    29429 total pagecache pages
    1 pages in swap cache
    Swap cache stats: add 32, delete 31, find 0/0
    Free swap = 522108kB
    Total swap = 522236kB
    65515 pages RAM
    2876 pages reserved
    23111 pages shared
    39218 pages non-shared
    root@orzo:/var/log#

- (done) Investigate; see BTS#666021

- (done) Apply workaround by running:

    sysctl -w vm.min_free_kbytes=65536
    echo "vm.min_free_kbytes=65536" >> /etc/sysctl.conf

- (done) Resume stress testing:

    rsync: writefd_unbuffered failed to write 4 bytes to socket [sender]: Broken pipe (32)
    rsync: writefd_unbuffered failed to write 4 bytes to socket [sender]: Broken pipe (32)
    rsync: connection unexpectedly closed (382481 bytes received so far) [sender]
    rsync error: error in rsync protocol data stream (code 12) at io.c(605) [sender=3.0.9]
    rsync: writefd_unbuffered failed to write 4 bytes to socket [sender]: Broken pipe (32)
    rsync: connection unexpectedly closed (382482 bytes received so far) [sender]
    rsync error: error in rsync protocol data stream (code 12) at io.c(605) [sender=3.0.9]
    rsync: connection unexpectedly closed (17078 bytes received so far) [sender]
    rsync error: error in rsync protocol data stream (code 12) at io.c(605) [sender=3.0.9]

(This was several rsyncs running in parallel in both directions.) I suspect that the underlying cause is the same (insufficient memory in my VM), but at least the failure is now in userspace, not kernel space; therefore I think this does not count as a failure.

- (done) Resume stress testing; I have cloned my home onto ocfs2 in order to do some real-world testing.

- (failed) I got it to crash with the following in the dmesg output:

Test Fritz!Box with USB volumes

- (failed) Several problems:

- It is slow

- Although ext3 filesystems can be mounted, filesystems are only exported via ftp:// and smb://, both of which “flatten” the underlying attributes (permissions, owner, etc)

Add wheezy support to PDI

MDI is so complicated that I lack enthusiasm to start work on updating it to support wheezy. Therefore I will (temporarily) drop support for several helper scripts, by:

- making the resource helpers selectable

- offering a no-op helper for each resource

This will immediately allow me to do defaulted manual installations (i.e. ones where I just keep pressing ENTER to accept all defaults).
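The idea of a selectable helper with a no-op fallback can be sketched as below. This is only an illustration of the dispatch pattern: the function names, arguments and messages are hypothetical and are not taken from the MDI sources.

```shell
# Hypothetical helpers; the real MDI helpers are not shown on this page.
nop_helper()    { echo "nop: skipping '$1' for resource '$2'"; }
normal_helper() { echo "normal: would run '$1' for resource '$2'"; }

# Dispatch an action to whichever helper was selected for a resource.
run_helper() {
    mode=$1 action=$2 resource=$3
    case $mode in
        nop)    nop_helper "$action" "$resource" ;;
        normal) normal_helper "$action" "$resource" ;;
        *)      echo "unknown helper mode: $mode" >&2; return 2 ;;
    esac
}
```

For example, `run_helper nop create repo` reports that the step was skipped, which is what makes a manual, all-defaults installation possible while the real helpers are still being ported.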

- (done) Port MDI framework to hierarchical/plugin-style:

    gnocchi# cd ~/opt/mdi-branch-multi-handler/lib
    gnocchi# ls -l
    total 12
    drwxr-xr-x 3 root root 4096 Apr 1 13:15 helpers
    drwxr-xr-x 3 root root 4096 Mar 31 16:14 pxe-images
    drwxr-xr-x 7 root root 4096 Apr 1 13:15 system
    gnocchi# ls -l helpers/
    total 32
    -rwxr-xr-x 1 root root 4765 Mar 31 18:18 choice-helper
    -rwxr-xr-x 1 root root 12513 Apr 1 13:15 generic-helper
    -rwxr-xr-x 1 root root 3968 Mar 31 16:14 multi-helper
    -rwxr-xr-x 1 root root 3636 Mar 31 16:14 nop-helper
    gnocchi# ls -l system/
    total 28
    drwxr-xr-x 5 root root 4096 Apr 1 13:00 autoinst
    drwxr-xr-x 4 root root 4096 Mar 31 21:58 dns
    drwxr-xr-x 5 root root 4096 Apr 1 13:00 hardware
    lrwxrwxrwx 1 root root 25 Apr 1 12:33 helper -> ../helpers/generic-helper
    -rw-r--r-- 1 root root 8833 Apr 1 13:07 helper-hooks
    drwxr-xr-x 5 root root 4096 Mar 31 22:05 repo
    gnocchi#

and a session looks like this:

    gnocchi# cd system
    gnocchi# ./helper edit xxx
    auto-install: [y]
    VM: [y]
    virtualisation tool: [virt-manager]
    engine: [kvm]
    HVM: [n]
    arch: [amd64]
    release: [squeeze]
    system/repo helper (nop/normal): [normal]
    install payload: [false]
    memory (MB): [128]
    disk (GB): [10]
    CPUs (1-): [1]
    gnocchi#

Most of the helpers do very little and the old code needs to be integrated; but the refactoring will make installations more flexible (e.g. create storage and VM resources automatically, but do the installation manually using virt-manager).

- (done) Test/develop a virt-manager-based installation

- (postponed) Test/develop a virsh-based installation with storage being created by a plugin; this will not be done as part of the rollout

- (done) Validate tools on a login server configuration:

- (done) CD ripping: use Asunder as per recommendations

- (done) audio editor: use mhwaveedit as per recommendations

- (done) is paper size correct? No. Now set to A4; check next time

- (done) /etc/pulse/client.conf is still wrong; pulseaudio seems okay now

- (failed) nscd host cache is 211, but I set it to 3301? It is set to 3301 in the configuration, but nscd -g still reports it as 211:

    fiori# grep suggested /etc/nscd.conf
    # suggested-size <service> <prime number>
    suggested-size passwd 3301
    suggested-size group 3301
    suggested-size hosts 3301
    suggested-size services 3301
    fiori# nscd -g | grep suggested
    211 suggested size
    211 suggested size
    211 suggested size
    211 suggested size
    fiori#

- (done) is unetbootin working without p7zip-full? yes

- (done) can mutt encrypt/decrypt mails or do I need the pgpewrap symlinks? Yes – but I need to stop loading my own ~/.mutt/gpg

- (done) Add Nagios client support to mdi/pdi
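The nscd check in the validation list above compares the configured suggested-size with what the running daemon reports. A minimal sketch of that comparison follows, assuming the nscd.conf and `nscd -g` formats shown in the transcript; the function names are illustrative.

```shell
# Print the suggested-size configured for one service in an nscd.conf file.
# Comment lines are skipped because their first field is "#".
configured_size() {
    service=$1 file=${2:-/etc/nscd.conf}
    awk -v s="$service" '$1 == "suggested-size" && $2 == s { print $3; exit }' "$file"
}

# Read "nscd -g" output on stdin and print each reported suggested size.
reported_sizes() {
    awk '$2 == "suggested" && $3 == "size" { print $1 }'
}
```

Comparing `configured_size hosts` against `nscd -g | reported_sizes` makes a mismatch like the one above easy to spot after each configuration change.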

Test pacemaker with Meatware

Due to the above-mentioned issues with Corosync/OpenAIS, this configuration did not need to be tested.

Test clustered DNS, clustered DHCP, clustered NIS

- (done) Cluster DNS and DHCP

- (done) Install DNS and DHCP on one system in master mode and document at Configuring DNS services and Configuring DHCP services

- (done) repeat to check instructions correct

- (done) Install DNS and DHCP on a second system in slave mode and document at Configuring DNS services and Configuring DHCP services

- (done) repeat to check instructions correct

- (done) Cluster NIS

- (done) Install NIS on one system in master mode and document at Configuring NIS services

- (done) repeat to check instructions correct

- (done) Install NIS on a second system in slave mode and document at Configuring NIS services

- (done) repeat to check instructions correct

- (done) Test DHCP using a DHCP client (e.g. smartphone)

- (done) Test NIS using a NIS client
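One way to check that the DNS master and slave configured above stay in step is to ask both for the same name and compare the answers. This is a sketch only: it assumes `dig` (from the dnsutils package) is installed, and the server and host names in the usage note are examples rather than a record of what was actually run.

```shell
# Succeed if two DNS servers return the same, non-empty answer for a name.
same_answer() {
    name=$1 first=$2 second=$3
    a=$(dig +short "@$first" "$name" | sort)
    b=$(dig +short "@$second" "$name" | sort)
    [ -n "$a" ] && [ "$a" = "$b" ]
}
```

For instance, `same_answer orzo fiori torchio || echo "master and slave disagree"` would flag a zone that has not been transferred.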

Decide hardware configuration for VM/login servers

- DRBD over LVs has proved very reliable (I have used it since the Debian squeeze rollout) but has the disadvantage that I cannot use libvirt storage pools, so I can’t leverage virsh’s storage-related subcommands

- The reliability and simplicity of OCFS2 was a real revelation! mkfs.ocfs2’s -T option makes it easy to tune for home directories and VM storage pools

- Using mount -o bind I can present subdirectories of an ocfs2 filesystem using a vol-like interface:

    macaroni# ls /vol/misc
    logs lost+found mail shared svnrepos wikis
    macaroni# mount -o bind /vol/misc/mail /vol/mail
    macaroni# ls /vol/mail
    indexes maildir
    macaroni# umount /vol/mail
    macaroni#

Of course, it does not prevent an application (e.g. svnserve) using one sub-directory (e.g. svnrepos) from filling the filesystem and impacting an application (e.g. postfix) using another sub-directory (e.g. mail), as truly separate filesystems would, but it does allow me to:

- keep a clean interface to the sub-directories

- not waste so much space by adding margins to multiple volumes

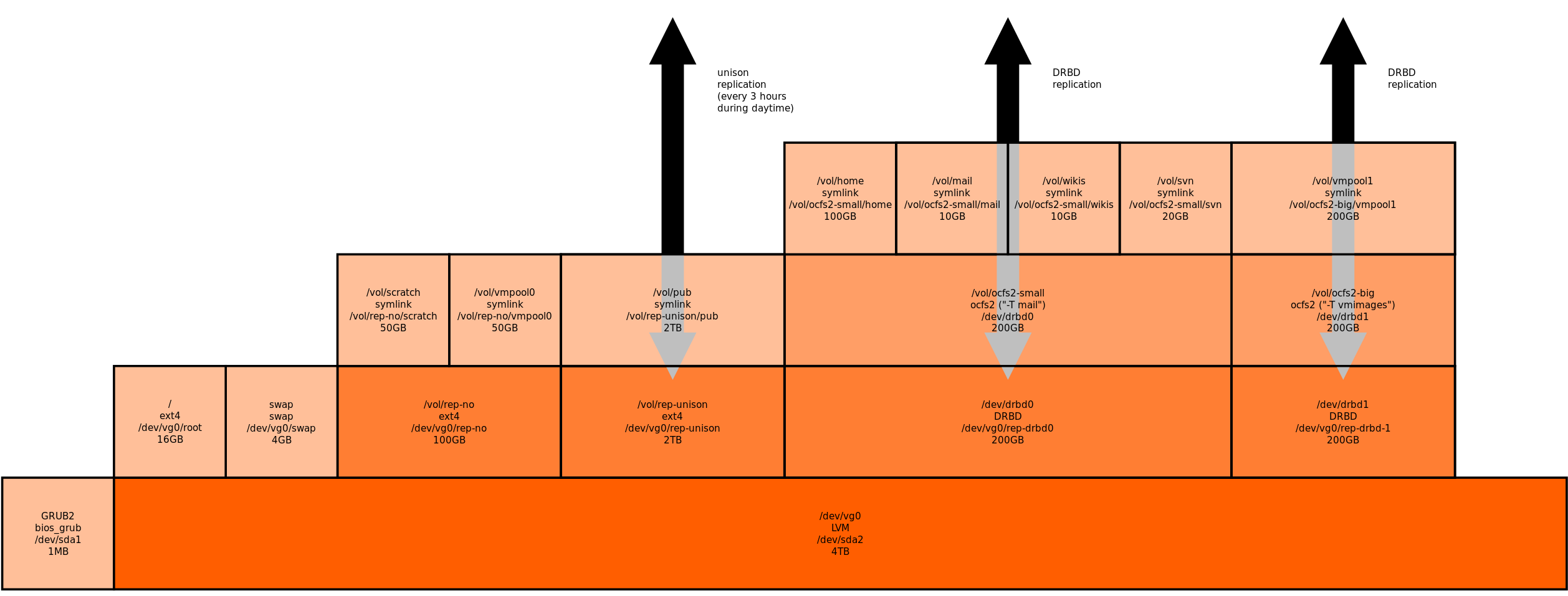

- One possible configuration based on results obtained above is as follows:

I.e. ocfs2+DRBD for replicating VM images, homes and other important small files; Unison for bidirectional syncing of relatively static multimedia content; NFS for exporting all “top-of-stack” volumes; bind mounts for customising export paths (or, put another way, for hiding implementation-revealing paths)

- This was almost the configuration I used, except:

- the bind mounting was too confusing and introduced ordering dependencies; instead I used symlinks

- the unison synchronisation was a light enough task to allow me to execute it every 3 hours, except at night
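The symlink alternative to bind mounts mentioned above can be sketched like this. The function name is hypothetical and the paths in the usage note simply reuse the /vol/misc example from earlier in this section; `ln -sfn` is the GNU coreutils form, which replaces an existing link rather than descending into it.

```shell
# Present a sub-directory of a shared filesystem under a stable path by
# symlinking it, instead of bind mounting it.
present_subdir() {
    target=$1 link=$2
    [ -d "$target" ] || { echo "no such directory: $target" >&2; return 1; }
    ln -sfn "$target" "$link"
}
```

For example, `present_subdir /vol/misc/mail /vol/mail` gives the same vol-like path as the bind mount did, without adding a mount-ordering dependency at boot.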